Clone, share and modify data pipeline JSON definitions

Take advantage of data pipeline JSON definitions

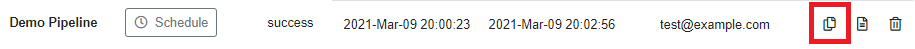

Each pipeline's definition is stored as a JSON object. Pipeline definitions are designed to be human-readable and can be exported by clicking the Export button in the pipeline details view.

A valid definition must contain two top-level nodes: datasets and operations.

    {
      "datasets": {},
      "operations": []
    }

For example, the following definition loads the user table from the existing MyDBConn database connection and applies a filter operation. Pipeline definitions are decoupled from data connections: connection parameters are not stored in definitions and cannot be passed in through them. Each connection must first be added in the usual way under 'Data connections'.

{

"datasets": {

"user": {

"loc": "user",

"jdbcDatasourceName": "MyDBConn"

}

},

"operations": [

{

"dataset": "user",

"type": "filter",

"condition": "enabled = false"

},

{

"dataset": "user",

"type": "show",

"count": "5",

"count_total": false,

"truncate_values": false

}

]

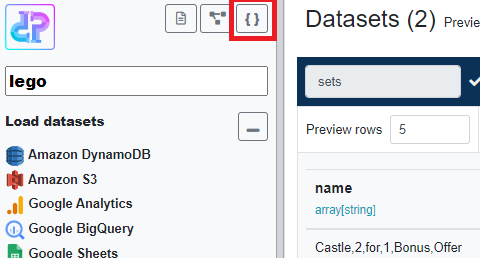

}The type of connection is inferred from the jdbcDatasourceName property. For example, if MyDBConn was a DynamoDB connection, the property name would be dynamodbDatasourceName. To find the property name for any type of connection, load a table and look at the pipeline definition.
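The naming rule described above can be sketched in Python. This is a minimal illustration, not part of the product: the helper name `connection_refs` is hypothetical, and it assumes that every connection property follows the `<type>DatasourceName` pattern seen in the two examples (jdbcDatasourceName, dynamodbDatasourceName). Other connection types may use different property names, so check an exported definition first.

```python
import json

# Assumed naming pattern, inferred from the two property names in the text.
SUFFIX = "DatasourceName"


def connection_refs(definition):
    """Return {dataset_name: (connection_type, connection_name)}.

    Hypothetical helper: scans each dataset for a property whose name
    ends with the assumed suffix and treats the prefix as the type.
    """
    refs = {}
    for dataset_name, props in definition.get("datasets", {}).items():
        for key, value in props.items():
            if key.endswith(SUFFIX) and key != SUFFIX:
                refs[dataset_name] = (key[: -len(SUFFIX)], value)
    return refs


definition = json.loads("""
{
  "datasets": {"user": {"loc": "user", "jdbcDatasourceName": "MyDBConn"}},
  "operations": []
}
""")

print(connection_refs(definition))  # {'user': ('jdbc', 'MyDBConn')}
```

Running this against an exported definition gives a quick list of the data connections the pipeline depends on.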

Use cases

Environment switching

Since data connections are identified by name, you can build a 'test' pipeline against a small amount of data, then create a new 'production' pipeline and update the data connection names accordingly. This is the recommended way to create data pipelines, because it reduces cost and execution time while you design the pipeline.
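As a rough sketch of the renaming step, the Python snippet below rewrites connection names in an exported definition before it is imported as a new pipeline. The function `switch_connections` and the connection name `MyProdDBConn` are hypothetical, and the code assumes connection properties end in `DatasourceName`, as in the examples in this article.

```python
import copy


def switch_connections(definition, name_map):
    """Return a copy of the definition with data connection names replaced.

    name_map maps old connection names to new ones, for example
    {"MyDBConn": "MyProdDBConn"}. The input definition is left unchanged.
    """
    result = copy.deepcopy(definition)
    for props in result.get("datasets", {}).values():
        for key, value in list(props.items()):
            # Assumption: connection properties end in "DatasourceName".
            if key.endswith("DatasourceName") and value in name_map:
                props[key] = name_map[value]
    return result


test_definition = {
    "datasets": {"user": {"loc": "user", "jdbcDatasourceName": "MyDBConn"}},
    "operations": [
        {"dataset": "user", "type": "filter", "condition": "enabled = false"}
    ],
}

# "MyProdDBConn" is a made-up name for a production connection.
prod_definition = switch_connections(test_definition, {"MyDBConn": "MyProdDBConn"})
print(prod_definition["datasets"]["user"]["jdbcDatasourceName"])  # MyProdDBConn
```

Working on a deep copy keeps the test definition intact, so both versions can be saved side by side.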

Backup

Pipelines can be backed up by exporting their definition. Definitions can be imported via the 'Add Pipeline -> Import definition' option.

Modification

Advanced users can modify a pipeline's definition manually. Take care when doing so, as a manual edit can leave you with an invalid definition.
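Before importing a hand-edited definition, a basic sanity check can catch the most obvious mistakes. The sketch below is an illustration only: `check_definition` is a hypothetical helper, and it covers just JSON syntax plus the two required nodes. It assumes datasets is an object and operations is an array, as in the minimal example in this article, and it does not validate individual operations.

```python
import json


def check_definition(text):
    """Return a list of problems; an empty list means the basic checks passed."""
    try:
        definition = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]

    if not isinstance(definition, dict):
        return ["top level must be a JSON object"]

    problems = []
    # Assumed types, taken from the minimal example: {} and [].
    if not isinstance(definition.get("datasets"), dict):
        problems.append('"datasets" must be present and be an object')
    if not isinstance(definition.get("operations"), list):
        problems.append('"operations" must be present and be an array')
    return problems


print(check_definition('{"datasets": {}, "operations": []}'))  # []
print(check_definition('{"datasets": {}}'))
# ['"operations" must be present and be an array']
```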

Sharing

Pipeline definitions can be shared. Note that data connections with the same names must already exist wherever the definition is imported.

Cloning / Duplication

Rather than creating multiple similar pipelines by hand, you can clone a pipeline either by exporting and re-importing its definition or by clicking the 'Duplicate' button in the Dashboard or Project view.